One of Facebook’s first moves as Meta: Teaching robots to touch and feel

Meta AI, previously Facebook AI Research, is working on touch sensors that can gather information about how objects feel in the real world.

Last week, Mark Zuckerberg officially announced that his company was changing its name from Facebook to Meta, with a prominent new focus on creating the metaverse.

A defining feature of this metaverse will be creating a feeling of presence in the virtual world. Presence could mean simply interacting with other avatars and feeling immersed in a foreign landscape. Or it could even involve engineering some sort of haptic feedback for users when they touch or interact with objects in the virtual world. (A primitive form of this was the way your Wii controller vibrated when you hit the ball in Wii Tennis.)

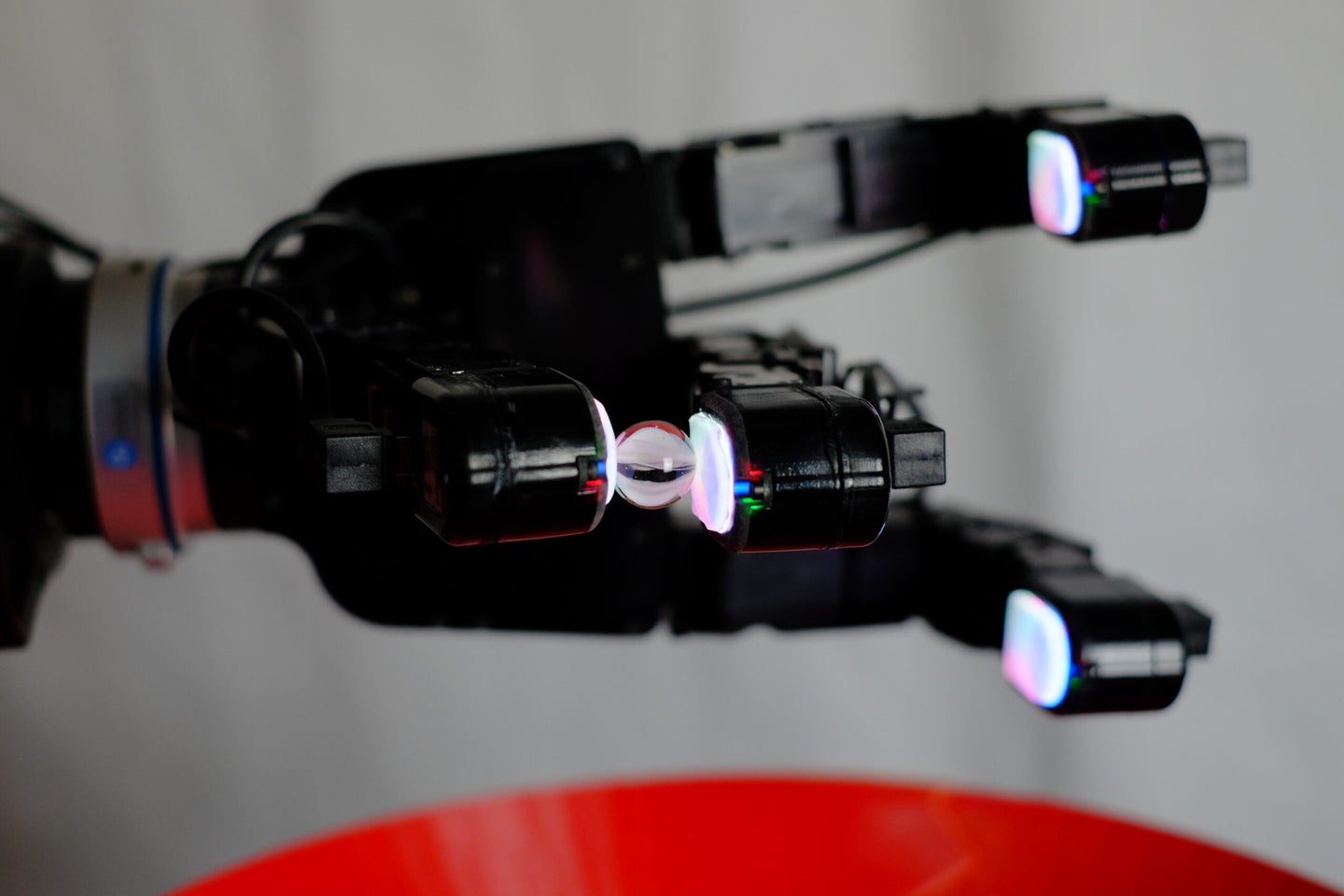

As part of all this, a division of Meta called Meta AI wants to help machines learn how humans touch and feel by using a robot finger sensor called DIGIT and a robot skin called ReSkin.

“We designed a high-res touch sensor and worked with Carnegie Mellon to create a thin robot skin,” Meta CEO Mark Zuckerberg wrote in a Facebook post today. “This brings us one step closer to realistic virtual objects and physical interactions in the metaverse.”

Meta AI sees robot touch as a promising research domain that can help artificial intelligence improve by receiving feedback from the environment. Through this touch-centered research, Meta AI hopes both to push the field of robotics forward and, down the road, possibly to bring a sense of touch into the metaverse.


“Robotics is the nexus where people in AI research are trying to get the full loop of perception, reasoning, planning and action, and getting feedback from the environment,” says Yann LeCun, chief AI scientist at Meta. LeCun also thinks that understanding how real objects feel could be vital context for AI assistants (like Siri or Alexa) if they are one day to help humans navigate an augmented or virtual world.

Touch with DIGIT

The way machines currently sense the world is not very intuitive, and there is room for improvement. For starters, machines have mainly been trained on vision and audio data, leaving them ill-equipped to take in other types of sensory data, like taste, touch, and smell.

That’s different from how a human works. “From a human perspective, we extensively use touch,” Meta AI research scientist Roberto Calandra, who works with DIGIT, says. “Historically, in robotics, touch has always been considered a sense that would’ve been extremely useful to have. But because of technical limitations, it has been hard to have a widespread use of touch.”

The goal of DIGIT, the research team wrote in a blog post, is to create a compact robot fingertip that is cheap and able to withstand wear and tear from repeated contact with surfaces. It also needs to be sensitive enough to measure properties like surface features and contact forces.

When humans touch an object, they can gauge the general shape of what they’re touching and recognize what it is. DIGIT tries to mimic this through a vision-based tactile sensor.

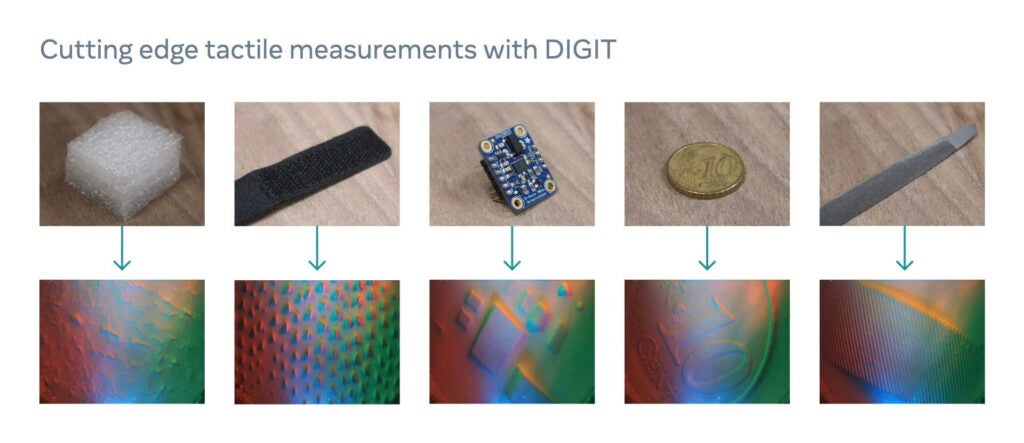

DIGIT consists of a gel-like silicone pad shaped like the tip of your thumb that sits on top of a plastic square. That plastic housing contains sensors, a camera, and lights on a printed circuit board (PCB) that illuminate the silicone. Whenever the silicone gel, which resembles a disembodied robot finger, touches an object, it creates shadows or changes in color hue in the image recorded by the camera. In other words, the touch it senses is expressed visually.

“What you really see is the geometrical deformation and the geometrical shape of the object you are touching,” Calandra says. “And from this geometrical deformation, you can also infer the forces that are being applied on the sensor.”
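To make the idea concrete, here is a minimal Python sketch of vision-based touch sensing. It assumes grayscale frames stored as nested lists, compares each camera frame with a reference frame captured while nothing touches the gel, and treats pixels that changed beyond a threshold as the contact imprint. The total change is then mapped to a force with a hypothetical linear calibration constant. The function names, threshold, and constant are illustrative assumptions, not DIGIT’s actual software, and a real sensor would need a properly calibrated (often learned) model.

```python
# HYPOTHETICAL sketch of vision-based tactile sensing in the spirit of DIGIT.
# Frames are grayscale intensities (0-255) stored as nested lists; a real
# sensor streams color images from its internal camera.

Frame = list[list[int]]


def contact_mask(reference: Frame, current: Frame, threshold: int = 20) -> list[list[bool]]:
    """Flag pixels whose intensity moved more than `threshold` away from the
    no-contact reference frame. The flagged region is the contact imprint."""
    return [
        [abs(cur - ref) > threshold for ref, cur in zip(ref_row, cur_row)]
        for ref_row, cur_row in zip(reference, current)
    ]


def deformation_score(reference: Frame, current: Frame, threshold: int = 20) -> int:
    """Sum the absolute intensity change over imprint pixels, a crude proxy
    for how strongly the gel is deformed."""
    total = 0
    for ref_row, cur_row in zip(reference, current):
        for ref, cur in zip(ref_row, cur_row):
            change = abs(cur - ref)
            if change > threshold:
                total += change
    return total


def estimate_force(score: int, newtons_per_unit: float = 0.001) -> float:
    """Convert the deformation score to newtons using a HYPOTHETICAL linear
    calibration constant (assumed, not a published DIGIT value)."""
    return score * newtons_per_unit


# Usage: a 4x4 frame where two pixels change shading under contact.
reference = [[100] * 4 for _ in range(4)]
current = [row[:] for row in reference]
current[1][1] = current[1][2] = 160
print(sum(sum(row) for row in contact_mask(reference, current)))  # 2
print(deformation_score(reference, current))  # 120
```

Differencing against a rest frame keeps the sketch simple; it ignores lighting drift and gel wear, which a deployed sensor would have to handle.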

A 2020 paper that open-sourced the tech behind DIGIT provided a few examples of what different objects look like to DIGIT. DIGIT can capture the imprint of objects it touches. It can feel the small contours on the face of a coin and the jagged, individual teeth on a small piece of Velcro.

But now, the team aims to gather more data on touch, and they can’t do it alone.

That’s why Meta AI has partnered with MIT spin-off GelSight to commercially produce these sensors and sell them to the research community. The cost of the sensors is about $15, Calandra says, which is orders of magnitude cheaper than most of the touch sensors available commercially.


As a companion to the DIGIT sensor, the team is also open-sourcing a machine learning library for touch processing called PyTouch.

DIGIT’s touch sensor can reveal far more than simply looking at an object can. It can provide information about the object’s contours, textures, elasticity or hardness, and how much force can be applied to it, says Mike Lambeta, a hardware engineer working on DIGIT at Meta AI. An algorithm can combine that information and give a robot feedback on how best to pick up, manipulate, move, and grasp different objects, from eggs to marbles.
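Lambeta doesn’t describe that algorithm. As a purely hypothetical illustration of touch feedback during a grasp, the loop below tightens a grip while the sensor reports slip and caps the force according to estimated hardness, so a fragile, egg-like object gets a much lower ceiling than a rigid marble. `TouchReading`, `max_safe_force`, and every force value are invented for this sketch; none of it is PyTouch’s API or Meta AI’s method.

```python
from dataclasses import dataclass


@dataclass
class TouchReading:
    """HYPOTHETICAL per-step tactile summary (not a DIGIT or PyTouch type)."""
    slipping: bool   # e.g. inferred from the imprint sliding between frames
    hardness: float  # 0.0 = soft or fragile, 1.0 = rigid


def max_safe_force(hardness: float, fragile_limit: float = 2.0, rigid_limit: float = 20.0) -> float:
    """Interpolate a grip-force ceiling in newtons (illustrative numbers)."""
    return fragile_limit + hardness * (rigid_limit - fragile_limit)


def adjust_grip(force: float, reading: TouchReading, step: float = 0.5) -> float:
    """One feedback step: tighten while the object slips, never past the ceiling."""
    if reading.slipping:
        force += step
    return min(force, max_safe_force(reading.hardness))


def simulate_grasp(hold_force: float, hardness: float, max_steps: int = 100) -> float:
    """Toy world: the object slips until the grip force reaches `hold_force`."""
    force = 0.0
    for _ in range(max_steps):
        reading = TouchReading(slipping=force < hold_force, hardness=hardness)
        new_force = adjust_grip(force, reading)
        if new_force == force:  # stopped slipping, or hit the safety ceiling
            break
        force = new_force
    return force


print(simulate_grasp(hold_force=1.5, hardness=0.0))  # egg-like: 1.5
print(simulate_grasp(hold_force=5.0, hardness=0.0))  # too fragile to hold safely: capped at 2.0
print(simulate_grasp(hold_force=5.0, hardness=1.0))  # marble-like: 5.0
```

The second case shows the point of the ceiling: rather than crushing a fragile object, the controller stops at its safe limit and leaves the decision to retry to higher-level planning.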

Feel with ReSkin

A robot “skin” that Meta AI created with Carnegie Mellon researchers in some ways complements the DIGIT finger.

ReSkin is an inexpensive, replaceable touch-sensing skin that uses a learning algorithm to make it more universal, meaning that, in theory, any robotics engineer could easily integrate the skin into their existing robots, Meta AI researchers wrote in a blog.

It’s a 2-3 mm thick slab that can be peeled off and replaced when it wears down. It can be tacked onto different types of robot hands, tactile gloves, and arm sleeves, or even onto the bottoms of dog booties, where it can collect touch data for AI models.

ReSkin has a rubbery elastomer surface that bounces back when you press on it. Embedded in the elastomer are magnetized particles.

When you apply force to the “skin,” the elastomer deforms, and as it deforms, the embedded particles move and shift the magnetic flux around them. Magnetometers under the skin read out that change. The force is registered in three directions, along the x, y, and z axes.
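A minimal sketch of that readout step, assuming each magnetometer reports one three-axis (x, y, z) reading: average a few samples taken while nothing presses on the skin, then express every new reading as a per-axis change from that rest state. This is one simple way to isolate the deformation signal, and because the rest state also contains any ambient field, subtracting it cancels a steady background as well. The function names, the contact threshold, and the numbers are hypothetical and are not taken from ReSkin’s software.

```python
# HYPOTHETICAL sketch of a ReSkin-style magnetometer readout.
# Each magnetometer reports one (x, y, z) reading; units are arbitrary here.

Vector3 = tuple[float, float, float]


def average_baseline(rest_samples: list[list[Vector3]]) -> list[Vector3]:
    """Average several no-contact samples per magnetometer. Besides the
    undeformed skin, this rest state also contains any steady ambient field."""
    n = len(rest_samples)
    baseline = []
    for s in range(len(rest_samples[0])):
        baseline.append(tuple(
            sum(sample[s][axis] for sample in rest_samples) / n for axis in range(3)
        ))
    return baseline


def flux_change(reading: list[Vector3], baseline: list[Vector3]) -> list[float]:
    """Flatten per-axis changes from rest into one feature vector:
    [dx0, dy0, dz0, dx1, dy1, dz1, ...]."""
    features = []
    for (x, y, z), (bx, by, bz) in zip(reading, baseline):
        features.extend([x - bx, y - by, z - bz])
    return features


def is_pressed(features: list[float], threshold: float = 1.0) -> bool:
    """Treat any axis change above `threshold` (an assumed value) as contact."""
    return any(abs(f) > threshold for f in features)


# Usage: two magnetometers, two rest samples, then one reading under a press.
rest = [
    [(10.0, 0.0, 40.0), (0.0, 5.0, 38.0)],
    [(12.0, 0.0, 42.0), (0.0, 7.0, 40.0)],
]
baseline = average_baseline(rest)
pressed = [(11.0, 2.0, 36.0), (1.0, 6.0, 39.0)]
print(flux_change(pressed, baseline))  # [0.0, 2.0, -5.0, 1.0, 0.0, 0.0]
print(is_pressed(flux_change(pressed, baseline)))  # True
```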

The algorithm then converts these changes in magnetic flux into force. It also takes into account the properties of the skin itself (how soft it is, how much it bounces back) when estimating the force. Researchers have taught the algorithm to subtract out the ambient magnetic environment, such as the magnetic signature of an individual robot or the magnetic field at a particular location on the globe.
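The article doesn’t say what kind of model ReSkin learns. As a simple stand-in, the sketch below fits a linear least-squares map from flux-change features (assumed to be baseline-subtracted, so the ambient field is already removed) to three-axis forces measured during calibration presses. Because the calibration data come from one particular skin, the fitted weights implicitly absorb how soft and springy that skin is. The function names and synthetic data are hypothetical, and a real system may well use a nonlinear learned model instead.

```python
# HYPOTHETICAL stand-in for ReSkin's learned force model: linear least squares
# from flux-change features to three-axis force, fitted on calibration presses.


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a square linear system by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [v - factor * w for v, w in zip(m[r], m[col])]
    return [row[n] for row in m]


def fit_force_model(flux: list[list[float]], force: list[list[float]],
                    ridge: float = 1e-6) -> list[list[float]]:
    """Return weights W (one row per force axis) minimizing ||W x - f||^2 over
    the calibration set. No intercept: zero flux change is taken to mean zero
    force, assuming the ambient field was removed by baseline subtraction."""
    d = len(flux[0])
    # Normal equations X^T X w = X^T y, plus a tiny ridge term for stability.
    xtx = [[sum(x[i] * x[j] for x in flux) + (ridge if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    weights = []
    for axis in range(len(force[0])):
        xty = [sum(x[i] * f[axis] for x, f in zip(flux, force)) for i in range(d)]
        weights.append(solve(xtx, xty))
    return weights


def predict_force(weights: list[list[float]], features: list[float]) -> list[float]:
    """Apply the fitted map to one flux-change vector, giving [Fx, Fy, Fz]."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


# Usage with synthetic calibration data generated from a known map.
true_w = [[2.0, 0.0, 1.0], [0.0, -1.0, 0.0], [0.5, 0.5, 0.5]]
calib_flux = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [0, 1, 1], [2, 1, 3]]
calib_force = [predict_force(true_w, x) for x in calib_flux]
weights = fit_force_model(calib_flux, calib_force)
print([round(f, 3) for f in predict_force(weights, [1.0, 2.0, 3.0])])  # [5.0, -2.0, 3.0]
```

A linear map is the simplest choice that still produces all three force components at once; if a skin’s response proves nonlinear, the same calibration pairs could train a small neural network instead.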

“What we have become good at in computer vision and machine learning now is understanding pixels and appearances. Common sense is beyond pixels and appearances,” says Abhinav Gupta, Meta AI research manager on ReSkin. “Understanding the physicality of objects is how you understand the setting.”

LeCun teased the possibility that the skin could be useful for haptics in virtual environments, so that when you touch an object you might get feedback that tells you what it is. “If you have one of those pieces of artificial skin,” he says, “then, combined with actuators, you can reconstruct the sensation on the person.”

Still, both DIGIT and ReSkin are only early prototypes of what touch sensors could be.

“Biologically, human fingers allow us to really capture a lot of sensor modality, not only geometry deformation but also temperature, vibrations,” says Calandra. “The sensors in DIGIT only give us, in this moment, the geometry. But ultimately, we believe we will need to incorporate these other modalities.”

Additionally, Calandra acknowledges, the gel tip mounted on a box is not a perfect form factor. More research is needed on how to create a robot finger that is as dexterous as a human finger. “Compared to a human finger, this is limited in the sense that my finger is curved, has sensing in all directions,” he says. “This only has sensing on one side.”

Hopes for a multi-sensory enabled “smart” metaverse

Meta has also been expanding the range of sensory data its AI learns from, to support potentially useful tools in the metaverse. In October, Meta AI unveiled a new project called Ego4D, which collected thousands of hours of first-person video that could one day teach AI-powered virtual assistants to help users remember and recall events.


“One big question we’re still trying to solve here is how you get machines to learn new tasks as efficiently as humans and animals,” says LeCun. Machines are clumsy and, for the most part, very slow to learn. For example, training a self-driving car with reinforcement learning has to be done in a virtual environment, because the car would have to drive for millions of hours, cause countless accidents, and destroy itself multiple times before it learned its way around. “And even then, it probably wouldn’t be that reliable,” he adds.

Humans, however, can learn to drive in just a few days because, by our teenage years, we have a pretty good model of the world. We know about gravity, and we know the basic physics of cars.

“To get machines to learn that model of the world and predict different events and plan what’s going to happen as a consequence of their actions is really the crux of the department here,” LeCun says.

Similarly, this kind of world model needs to be fleshed out for AI assistants that could one day interact naturally with humans in a virtual environment.

“One of the long term visions of augmented reality, for example, is virtual agents that can live in your augmented reality glasses or your smartphone or your laptop,” LeCun says. “It helps you in your daily life, as a human assistant would do, and that system will have to have some degree of understanding of how the world works, some degree of common sense, and be smart enough not to be frustrating to talk to. That is where all of this research leads in the long run.”