How an AI managed to confuse humans in an imitation game

Here’s how “hints of humanness” may have come into play in the Italian experiment.

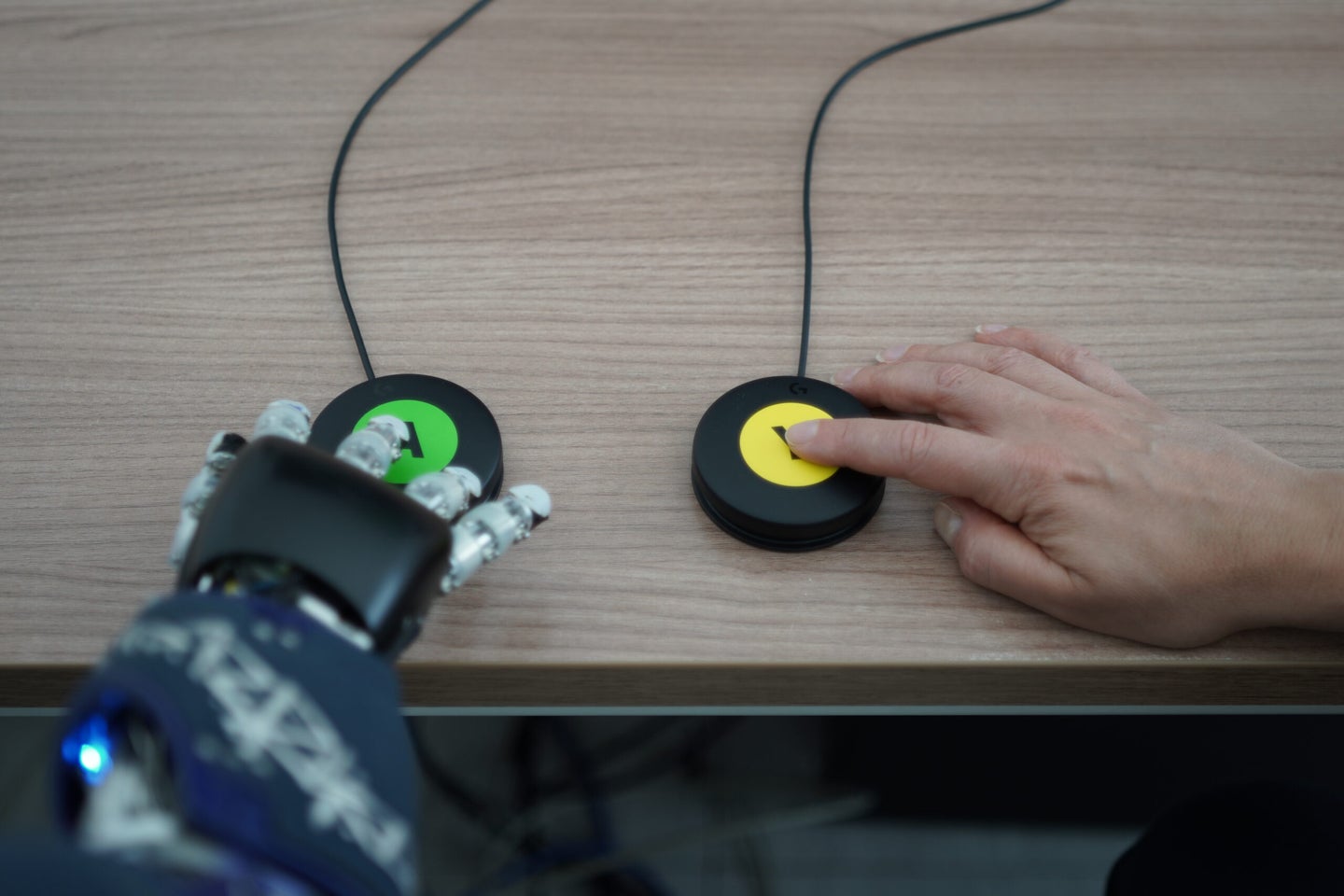

Researchers from the Italian Institute of Technology have trained an artificially intelligent computer program to pass a nonverbal Turing test. By mimicking human behavior, for example by varying its reaction times during an interactive shape- and color-matching game, the AI was able to hide its true identity from people. The related study was published this week in the journal Science Robotics.

A Turing test is a standard used by computer scientists to determine whether a machine can display a convincing enough level of human-like intelligence to fool a human into believing that it, too, could be human. This is usually done through a version of “the imitation game.” The game works like this: There are three players in total. One player, the judge, cannot see the other two but can communicate with them, by asking questions or otherwise interacting with them, to determine which of the two is human and which is a machine. A computer passes the Turing test when the judge can’t distinguish between the responses of the human and those of the computer.

“Given how our brains are tuned to detect very subtle and implicit signals of human-like behavior, it is worth asking whether a humanoid robot can ever pass the nonverbal version of the Turing test by embodying human characteristics in its physical actions,” the researchers wrote in the study.

In their experiment, they put a human and a robot together in a room. Both the human and the robot had to observe colored shapes on a screen. Each participant was in charge of responding to one color only. For example, one participant could be tasked with pressing their button only when a red square appears on the screen, and the other participant could be tasked with pressing their button only when a green square appears on the screen. But here’s the twist: The robotic teammate was remotely controlled, sometimes by a human and sometimes by AI. The AI took into account how long its human teammate took to respond and randomly varied its own reaction time, the delay between seeing the shape and pressing the button, to appear more human.
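
To make the idea of a randomly varied reaction time concrete, here is a minimal sketch in Python. It is not the researchers’ actual program, whose details are not given here. The function name `humanlike_reaction_time`, the Gaussian jitter, the `jitter_fraction` parameter, the sample timings, and the 0.15-second floor are all assumptions chosen purely for illustration.

```python
import random
import statistics

# Assumed lower bound on a plausible button-press delay, in seconds.
# This value is illustrative and does not come from the study.
MIN_REACTION_S = 0.15


def humanlike_reaction_time(teammate_times, rng, jitter_fraction=0.2):
    """Return a randomized reaction time (seconds) centered on the
    human teammate's average observed reaction time.

    teammate_times  -- reaction times observed from the human teammate
    rng             -- a random.Random instance, passed in for repeatability
    jitter_fraction -- hypothetical knob: spread as a fraction of the mean
    """
    if not teammate_times:
        raise ValueError("need at least one observed teammate reaction time")
    center = statistics.mean(teammate_times)
    spread = jitter_fraction * center
    # Draw a delay around the teammate's average, then clamp it so the
    # simulated press is never implausibly fast.
    return max(MIN_REACTION_S, rng.gauss(center, spread))


rng = random.Random(42)
observed = [0.41, 0.38, 0.52, 0.47]  # made-up teammate timings, in seconds
delays = [humanlike_reaction_time(observed, rng) for _ in range(5)]
print([round(d, 3) for d in delays])
```

Each call yields a slightly different delay, so the simulated button presses do not fall into the perfectly regular rhythm that could give a machine away.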

After running through the game together, the human participants were asked to guess whether their robot teammate was run by another human or by the AI-powered computer program. While people could usually tell when another human was behind the robot, they had trouble when it came to the AI, and guessed wrong more than 50 percent of the time. “Our results suggest that hints of humanness, such as the range of behavioral variability, might be used by observers to ascribe humanness to a humanoid robot,” the researchers wrote. “This provides indications for robot design, which aims at endowing robots with behavior that can be perceived by users as human-like.”

This is not the first time a machine has passed the Turing test. In a 2014 event organized by the University of Reading, a computer program convinced one-third of the human judges at the Royal Society in London that it was a 13-year-old boy. And just last month, one of Google’s AI programs also passed a version of this test, igniting controversy over the ethics of these types of programs. Many scientists, though, have noted that while passing the Turing test is a meaningful milestone, inherent flaws in the test’s design mean it cannot be used to measure whether machines are actually thinking, and therefore cannot be used to prove true general intelligence.